Thanks to Abhilash and Akash for their contributions.

Verification is an art. You can search for this online and find scores of engineers agreeing with it.

Many of us may not be born artists, but we are engineers and we know science. We can devise a systematic approach to verification. In verification, our first task is often to write a test plan. This feels like facing an empty canvas and not knowing where to start or what to do with the colours.

I don’t know how to handle a canvas, but I can make some progress with a testplan. We will focus on developing a testplan for module/IP-level verification, with some guidelines and checkpoints that help us stay on track.

Writing a testplan for module level (IP level) and writing one for full-chip level are two different things. There may be overlap, but each needs a different approach. The full-chip testplan is not the topic of the text that follows.

Coming to the point, we need to draw an outline for our testplan. Let’s create separate sections to cover different aspects. I would like to make the following sections; each section may have further subsections and sub-subsections.

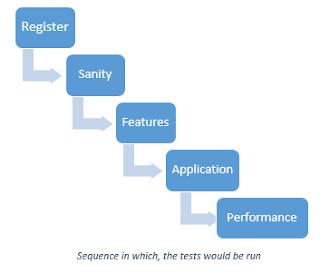

- Sanity

- Register and memory access

- Features

- Applications

- Performance

The segregation is not mutually exclusive. Many areas overlap with each other. As verification engineers, we should worry about not leaving a hole; overlaps are fine.

Sanity

The testcases listed here aim at the basic functioning of the module. What constitutes ‘basic’ is contextual. These are the baby steps that, once perfected, form the base for running the other tests. It is easiest to illustrate with examples:

- A single correct read and write transaction

- A packet being correctly received or transmitted

The sanity testcases serve as a basic indicator of the health of the IP. These testcases are generally run throughout the development process. Typically, we run sanity tests a) after bug fixes, to ensure that the fix has not broken the basics, and b) periodically, to check that the database (RTL + TB) still compiles and runs.

Sanity tests are the most directed and quickest-running tests. We don’t aim to cover many features here.

Register and memory access

Most IPs have some configuration registers, memories and status registers. This section deals with them. The testcases include default value checks, read and write accesses, front-door and back-door read/write combinations, memory depth checks, etc. With some experience, you will realise that this section, along with its tests, can be copy-pasted from other modules.

It is always beneficial to list the register and memory access testcases in the testplan. These tests should be run before the sanity tests. They help uncover bugs in configuration and status registers, and such bugs are tedious to debug from sanity or other feature tests.
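To make the register checks concrete, here is a minimal Python sketch of a default-value check followed by front-door/back-door combinations. Everything in it is hypothetical: the register map, the `FakeDut` stand-in (front door honours a writable-bit mask, back door pokes storage directly) and the error strings. In a real testbench the front door would be a bus transaction and the back door a direct access to the RTL storage.

```python
# Hypothetical register map: name -> (address, reset value, mask of writable bits)
REG_MAP = {
    "CTRL":   (0x00, 0x0000_0001, 0x0000_00FF),
    "STATUS": (0x04, 0x0000_0000, 0x0000_0000),  # read-only: no writable bits
    "IRQ_EN": (0x08, 0x0000_0000, 0x0000_000F),
}


class FakeDut:
    """Stand-in for the design. Front door = bus access that honours the
    writable mask; back door = direct peek/poke of the storage."""

    def __init__(self):
        self.storage = {addr: reset for addr, reset, _ in REG_MAP.values()}
        self.masks = {addr: mask for addr, _, mask in REG_MAP.values()}

    def bus_write(self, addr, data):
        mask = self.masks[addr]
        self.storage[addr] = (self.storage[addr] & ~mask) | (data & mask)

    def bus_read(self, addr):
        return self.storage[addr]

    def backdoor_write(self, addr, data):
        self.storage[addr] = data

    def backdoor_read(self, addr):
        return self.storage[addr]


def check_registers(dut):
    """Default-value check, then FD-write/BD-read and BD-write/FD-read per register."""
    errors = []
    for name, (addr, reset, mask) in REG_MAP.items():
        if dut.bus_read(addr) != reset:
            errors.append(f"{name}: reset value mismatch")
        # Front-door write of all ones: only the writable bits may change.
        dut.bus_write(addr, 0xFFFF_FFFF)
        expected = (reset & ~mask) | mask
        if dut.backdoor_read(addr) != expected:
            errors.append(f"{name}: FD write / BD read mismatch")
        # Back-door restore of the reset value, then front-door read.
        dut.backdoor_write(addr, reset)
        if dut.bus_read(addr) != reset:
            errors.append(f"{name}: BD write / FD read mismatch")
    return errors
```

Because the checks are driven entirely by the register map, the same loop can be reused across modules, which is why this section tends to be copy-pasted.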

Features

These are the very reasons for the IP or module to exist: the properties or functions that the IP needs to fulfil. We need to list all the features mentioned in the IP architecture/micro-architecture documents and in the standard specifications. In this section we cover most of the configurations that the design supports, along with status and interrupts. We need to include the register configurations, and the interrupts and status registers getting triggered functionally. We carefully list everything that needs to be put to the test. While listing the testcases, do not worry about how the tests will be implemented. Think of it as somebody else’s job 😃, for two reasons: 1) we should not restrict our thinking with implementation constraints, and 2) we should add more detail to the testplan about the procedure, configuration sequence, status register checks, etc. It should be easy for somebody else to implement the testplan, i.e. write the testcases.

We will divide this section into two categories.

- Simple features

- Composite features

Simple features: These are straightforward functionalities broken down to the base level. We find them mentioned directly in the relevant documents. Typical examples of such statements are:

- Supports burst access up to 256 bytes

- Supports 24 10/100M ports

- Supports clock recovery from input stream

All of the above are just examples; the actual list is going to be bigger, probably the biggest of all the sections.

Composite features: A functionality made up of a number of simple features can be termed a composite feature. It involves multiple features, yet it can be visualized as a single functionality. I like to call these alloy features. Let’s go over some examples:

Exclusive access: It involves an exclusive read to address A, then an exclusive write to the same address A. There can be other accesses happening between the exclusive read and the exclusive write.
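A small reference model helps turn this composite feature into concrete expected results. The Python sketch below is a deliberately simplified exclusive monitor, loosely modelled on bus protocols that support exclusive accesses: it keeps one reservation per master, and any plain write to a reserved address clears it. These rules are assumptions for illustration; the actual rules come from the protocol and the IP specification.

```python
class ExclusiveMonitor:
    """Toy reference model of exclusive access: one reservation per master."""

    def __init__(self):
        self.reservations = {}  # master id -> reserved address

    def exclusive_read(self, master, addr):
        self.reservations[master] = addr

    def write(self, master, addr):
        # Simplification: any plain write to a reserved address clears it,
        # whichever master issues it (master is unused in this model).
        self._clear(addr)

    def exclusive_write(self, master, addr):
        """Return True (exclusive OK) only if this master's reservation is intact."""
        ok = self.reservations.pop(master, None) == addr
        if ok:
            self._clear(addr)  # a successful write breaks other masters' reservations
        return ok

    def _clear(self, addr):
        for m in [m for m, a in self.reservations.items() if a == addr]:
            del self.reservations[m]
```

For example, an exclusive read to A, an unrelated write elsewhere, then an exclusive write to A should succeed, while a plain write to A from another master in between should make the exclusive write fail.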

Packet forwarding: This needs a proper forwarding table, established by learning or by configuration. A packet that hits the forwarding table must be forwarded to its destination port.
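The expected behaviour of this composite feature can be captured in a small scoreboard model. The Python sketch below assumes common L2-switch behaviour: unknown source addresses are learned, a table hit forwards to one port, and a miss floods to every port except the ingress port. The class and method names are hypothetical, and the real rules (aging, station moves, filtering) come from the IP specification.

```python
class ForwardingModel:
    """Hypothetical reference model: L2 forwarding table with source learning."""

    def __init__(self, num_ports):
        self.num_ports = num_ports
        self.table = {}  # MAC address -> egress port

    def configure(self, mac, port):
        self.table[mac] = port  # static entry written by software

    def expected_ports(self, src_mac, dst_mac, in_port):
        # Learning: the first packet from an unknown source adds an entry.
        if src_mac not in self.table:
            self.table[src_mac] = in_port
        if dst_mac in self.table:
            return [self.table[dst_mac]]  # table hit: learned or configured port
        # Table miss: flood to every port except the ingress port.
        return [p for p in range(self.num_ports) if p != in_port]
```

A test would feed every packet the DUT receives through `expected_ports` and compare the result with the ports on which the DUT actually transmitted it.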

If the above two examples do not strike a chord, here is a vaguer one. Imagine a robot with the functionality to travel by metro. It involves multiple tasks such as buying a ticket, climbing stairs, waiting for the right train, boarding and disembarking. Having included the individual scenarios in the simple features, we should verify all the features in conjunction with one another. We can club two or more features at a time. The composite features form the base for our application tests.

Application

These are the functions that the IP is going to execute in the field. It is very important to know and list the application scenarios. As the first people to verify the IP, we can reduce the risk of field failures to a great extent. I have emphasized the importance of knowing the applications in another blog post, Tight Rope Walker - A verification Scenario.

It is difficult to imagine all the end applications. So we must seek inputs from architects, marketing, the software team and everybody else related to the IP. Existing chips that contain this IP (a previous version) can also serve as an input.

This category of tests targets multiple functions at a time. The goal is to create real-life scenarios as much as possible.

An application testcase for a switch: packets arrive on multiple interfaces with an exponential traffic distribution. The destination can be a single port or multiple ports (again, depending on the application). Some packets are seen for the first time, triggering source learning.
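One way to read "exponential traffic distribution" is exponentially distributed inter-arrival gaps on each port, i.e. Poisson arrivals. That interpretation is an assumption. The Python sketch below generates such a merged, time-ordered stimulus schedule with the standard library’s `random.expovariate`; the function name and parameters are hypothetical.

```python
import random


def generate_traffic(num_ports, packets_per_port, mean_gap_ns, seed=1):
    """Return (arrival_time_ns, ingress_port) events with exponential gaps per port."""
    rng = random.Random(seed)  # fixed seed so a failing run can be reproduced
    events = []
    for port in range(num_ports):
        t = 0.0
        for _ in range(packets_per_port):
            t += rng.expovariate(1.0 / mean_gap_ns)  # expovariate takes a rate (1/mean)
            events.append((t, port))
    events.sort()  # merge all ports into one time-ordered schedule
    return events


events = generate_traffic(num_ports=4, packets_per_port=100, mean_gap_ns=50.0)
```

Seeding the generator is a deliberate choice: application tests are long and random, so being able to replay the exact traffic pattern is essential when debugging a failure.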

Another example: a core is running a custom multi-threaded application. It is interrupted by DMA, slow peripherals and high-speed peripherals. The core gets data from other cores, caches and main memory. The data may come from different pages, causing intermittent page faults.

Having successfully tested the application scenarios, we can be fairly sure that the chip will be useful in the lab, despite some missed bugs. As mentioned in Chip Design Verification: Test-plan/Coverage Plan, the chip should not be DOA (dead on arrival).

Performance tests

These are not a special category of tests. They are partly feature and partly application testcases, with more emphasis on the advertised numbers. A separate section is created to mark their importance. The performance parameters can be found in the data sheet or in marketing material for the IP.

Examples are handling incoming traffic of n Gbps, an average read/write latency of n clocks, video processing capacity of 50 fps, etc.

These tests stress the IP. They are performed towards the end, once all the features have been tested and are passing.
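To compare a run against advertised numbers, the testbench has to reduce transaction records to throughput and latency figures. The Python sketch below does that arithmetic under hypothetical assumptions: each completed transaction is recorded as `(start_cycle, end_cycle, bytes)`, and the clock period is known. Throughput is measured over the whole window from the first start to the last end.

```python
from statistics import mean


def measure(records, clk_period_ns):
    """records: (start_cycle, end_cycle, num_bytes) per completed transaction."""
    first = min(start for start, _, _ in records)
    last = max(end for _, end, _ in records)
    window_ns = (last - first) * clk_period_ns
    total_bits = 8 * sum(nbytes for _, _, nbytes in records)
    throughput_gbps = total_bits / window_ns  # bits per ns is Gbit/s
    avg_latency_clks = mean(end - start for start, end, _ in records)
    return throughput_gbps, avg_latency_clks


# Example: four 64-byte transfers on a 125 MHz (8 ns) clock.
records = [(0, 16, 64), (4, 20, 64), (8, 24, 64), (12, 32, 64)]
gbps, latency = measure(records, clk_period_ns=8.0)
```

Here 2048 bits over a 256 ns window gives 8 Gbps, and the latencies of 16, 16, 16 and 20 clocks average to 17 clocks; a test would then assert these against the data-sheet targets.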

Error cases

This section lists error cases that are possible but not handled by the design. The handled error cases fall under the features section. We list the unhandled ones separately because the expectation differs from that of the feature tests. Since no graceful handling mechanism is specified in the design, the minimum expectation is that the design does not hang on seeing these errors. The design should then be able to return to normal operation.

The cases could be:

- Packet arrival rate greater than supported

- Soft corruption of single and multiple bits in internal memory
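For the memory corruption case, the testbench needs to compute a corrupted word before writing it back into the memory, typically through a back-door access whose mechanism is simulator- and testbench-specific. The Python sketch below only shows the bit-flip calculation: it flips a chosen number of distinct bit positions in a word of a given width. The function name and parameters are hypothetical.

```python
import random


def corrupt(word, width, num_bits, rng):
    """Flip num_bits distinct, randomly chosen bit positions in a width-bit word."""
    for pos in rng.sample(range(width), num_bits):  # sample() gives distinct positions
        word ^= 1 << pos
    return word


rng = random.Random(7)  # fixed seed for reproducible error injection
original = 0x1234_5678
single = corrupt(original, 32, 1, rng)  # single-bit error
multi = corrupt(original, 32, 3, rng)   # multi-bit error
```

Using distinct positions matters: flipping the same bit twice would silently turn a "multi-bit" error back into a smaller one.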

By the way, I missed this section in the list given at the beginning. Smart verification engineers would have figured that out. That’s the bug in the spec.

Table of Contents

When we write down all the sections, I imagine the testplan looking something like this:

1. Sanity

1.1 Basic read write

1.2 …

2. Register and memory accesses

2.1 Default value

2.2 Front door (FD), back door (BD)

…

3. Features

3.1 Transmit

3.1.1 Data path

3.1.1.1 Small packets

3.1.1.2 Large packets

3.1.2 Control path

3.2 Receive

3.2.1 Data path

3.2.1.1 Small packets

3.2.1.2 Large packets

3.2.2 Control path

3.3 Processor

4. Application

4.1 Customer end installation

4.2 CO installation

5. Performance

5.1 Data throughput

5.2 Latency guarantees

6. Errors

6.1 High traffic

6.2 Memory errors

Do we have the required documents?

All our efforts are in vain if we do not have complete information. Architecture documents are the primary source of information for verification. Often one document is not sufficient. The architecture document may implicitly or explicitly refer to standard documents, previous IP implementations, etc. We may refer to the design document to find out about implementation issues. It is a good idea to read all the relevant documents and think through the implementation of the IP. If we can visualize the complete implementation, we probably have a sufficient set.

Self-Review

We will definitely review the testplan with designers, architects and peers, but it is important that we first review it ourselves. Having written the testplan first-hand, I find myself biased and running out of patience to go over it again. This is where a checklist comes in handy.

- Numbers: Check that every number in the documents is put to the test. Search for the numbers only, e.g. clock frequency of 125 MHz, 24 ports, latency of 16 clocks, 1000 Mbps, etc. Identify the testcase in the testplan that covers each one. The testcase can be in any section.

- Statements: Look at every statement in the documents suspiciously. Put a question mark after the statement and look for the testcase in the testplan that tests it. E.g. the document says, “Small packets are dropped.” Let me test it.

- Configuration registers: I try to map each configuration register field to a testcase that verifies it.

- Status registers: Some testcase should read and check the status during or at the end of simulation. When mapping configuration and status registers to testcases, do not count the tests in Section 2 (Register and memory access); those check access, not functionality.

- Pin functionality: Ensure that we have tests for the functions of the IP’s interface pins.
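Parts of this checklist can be automated as a traceability check. The Python sketch below assumes a hypothetical testplan format: a dict from testcase number to the set of spec items it claims to cover. It extracts numbers with units from spec text using a simple regular expression (the unit list is an assumption; extend it for your documents), reports numbers no testcase claims, and reports configuration fields covered only by Section 2 tests.

```python
import re

# Number followed by a unit; the unit list is illustrative, not exhaustive.
NUMBER_RE = re.compile(
    r"\b\d+(?:\.\d+)?\s*(?:MHz|GHz|Mbps|Gbps|clocks?|ports?|bytes?)\b")


def spec_numbers(spec_text):
    """Collect numbers with units, with whitespace removed ("125 MHz" -> "125MHz")."""
    return {re.sub(r"\s+", "", m.group(0)) for m in NUMBER_RE.finditer(spec_text)}


def uncovered(spec_items, testplan):
    """Return the spec items that no testcase in the testplan claims to cover."""
    covered = set()
    for items in testplan.values():
        covered |= items
    return sorted(set(spec_items) - covered)


def uncovered_fields(fields, testplan, excluded_prefix="2."):
    """Register fields not covered functionally: Section 2 access tests don't count."""
    functional = {tc: items for tc, items in testplan.items()
                  if not tc.startswith(excluded_prefix)}
    return uncovered(fields, functional)


# Hypothetical inputs; testplan items use the same normalised form as spec_numbers().
spec = "Clock frequency of 125 MHz, 24 ports, latency of 16 clocks, 1000 Mbps."
testplan = {
    "3.1.1.1": {"125MHz", "24ports"},
    "5.2": {"16clocks"},
    "2.1": {"CTRL.EN"},
    "3.2.2": {"CTRL.MODE"},
}
```

With these inputs, `uncovered(spec_numbers(spec), testplan)` flags `1000Mbps`, and `uncovered_fields(["CTRL.EN", "CTRL.MODE"], testplan)` flags `CTRL.EN`, since its only test is a register access test. The statement and pin-function checks still need human judgement.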

With all the necessary goals set properly, we are ready to fire tests at the design, breaking it in order to strengthen it.

Some advanced checks that can be included in the testplan, depending on the IP type and requirements, are:

1. Reset sequence checks (cold/warm reset, etc.)

2. Clock checks when PPM offset is supported

3. Power-down state changes in IPs that support low-power states

4. Rate changes in IPs that support configurable rate changes after reset

5. Combinations of multiple interrupts

6. Concurrency tests when multiple masters can drive the data path

7. Stress tests such as overflow/underflow of buffers

8. A coverage plan in terms of code and toggle coverage

9. Assertion checks for protocols, and assertion coverage

10. NLP tests with PST coverage